How can technical systems be developed in a way that captures human movements while respecting privacy? Professor Dr Helge Rhodin from the Faculty of Technology at Bielefeld University is working on this question. The computer scientist heads the Visual AI for Extended Reality research group and, together with his team, is developing algorithms that make the interaction between humans and technology more reliable and safer.

Where do we stand in the development of generative visual artificial intelligence?

Helge Rhodin: We are impressively advanced – at least as far as the results are concerned. We currently see, for example, that AI can generate images in the style of famous artists. But one thing often goes unnoticed: the creativity still comes largely from humans; the style is only transferred, not created. Given the concern that AI will replace humans, this is a very reassuring thought.

© Bielefeld University

You are researching at the interface between computer vision and graphics – what exactly are you working on?

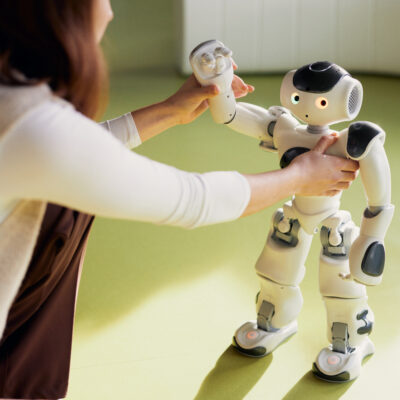

Helge Rhodin: For me, Extended Reality, XR, is the big picture. Machines should learn to ‘understand’ the real world – especially people and their movements. To do this, we combine imaging methods and computer vision: in other words, on the one hand, how information can be displayed realistically and, on the other, how machines can reconstruct what is happening from camera images. Our aim is to develop simple solutions that are suitable for everyday use, for example algorithms that work with just a small camera and recognise movements in real time. This is particularly crucial for virtual or augmented reality applications, i.e. VR or AR, because no complex sensor technology can be used there. We want to enable natural interaction in XR applications – without any controllers or complex technology. The technology should adapt to people – not the other way round.

How does your work at CITEC contribute to this?

Helge Rhodin: In my Visual AI for Extended Reality group, we combine computer vision, machine learning, computer graphics and augmented reality. We conduct basic research, but always with a view to specific future applications. The latest developments in machine learning and XR devices are opening up completely new possibilities: video analyses are becoming ever more precise and digital representations ever more realistic. We bring the two together.

What could such an application look like?

Helge Rhodin: These can be everyday interactions, such as a computer that understands what I need in certain situations. When I’m getting dressed in the morning, for instance, the system recognises that I’m standing in front of the wardrobe and hesitating a little, and automatically displays the weather forecast without me having to ask. It could be a pair of glasses or another intelligent assistance system. But it needs to understand the environment I’m in – and what I’m doing.

This takes us into a very private area of life. You are also working on making visual AI safer.

Helge Rhodin: Especially when it comes to reconstructing people, there is potential for abuse. In authoritarian regimes such as China or Russia, we can already see how facial recognition is used to monitor people. That’s why it’s important to create alternatives: systems that only record what happens without identifying people. We use alternative sensing methods such as coded optics or ultrasound to better protect privacy, for example in smart home applications. In one project, we are working with ultrasonic sensors that recognise whether someone has fallen – without providing a detailed image or identifying the person. We want to create alternatives to problematic applications so that it also becomes possible to argue legally that, in such cases, a conventional camera is not needed and more privacy-friendly alternatives are sufficient.

Where do you see the most important fields of application for your research?

Helge Rhodin: The areas of medicine and sport are very promising. For example, we have worked with professional skiers to better analyse their movement sequences in order to prevent injuries. Intrusive sensors cannot be used here because they would change the athletes’ behaviour. Our video-based methods can help to simulate forces on joints and optimise movements. In the field of health, we have a project with Canadian researchers at the University of British Columbia to enable medical diagnostics even in very remote regions. Using augmented reality, laypersons can perform ultrasound examinations under the remote guidance of medical professionals. Something similar applies to the industrial sector: tradespeople could be shown exactly what needs to be done when dismantling a machine. The technology provides step-by-step instructions directly in their field of vision, so they do not have to consult a manual.

You have conducted research in Switzerland and Canada and are now involved in the university’s focus area FAITH – ‘Foundations and Implications of Human-AI Teamwork’. FAITH investigates how humans and AI can work together effectively in hybrid teams. How does this tie in with your previous work?

Helge Rhodin: This brings me back to my motivation: machines need a better visual understanding. Visual communication is essential for hybrid teams with humans, robots and AI assistants. Research in Bielefeld is very interdisciplinary, and my research with visual AI has many other points of contact. I have also worked in the field of neuroscience, which is strongly represented in Bielefeld. There, it’s not about reconstructing people, but about animals – their movements are analysed and quantified in order to understand what exactly the animal is doing in a particular experiment. Much of my previous work ties in well with the topics in Bielefeld. Our computer vision solutions thus become a tool for gaining new insights in other fields of research.

© Seventyfour/stock.adobe.com

Transparency notice: This translation was created with machine assistance and subsequently edited.