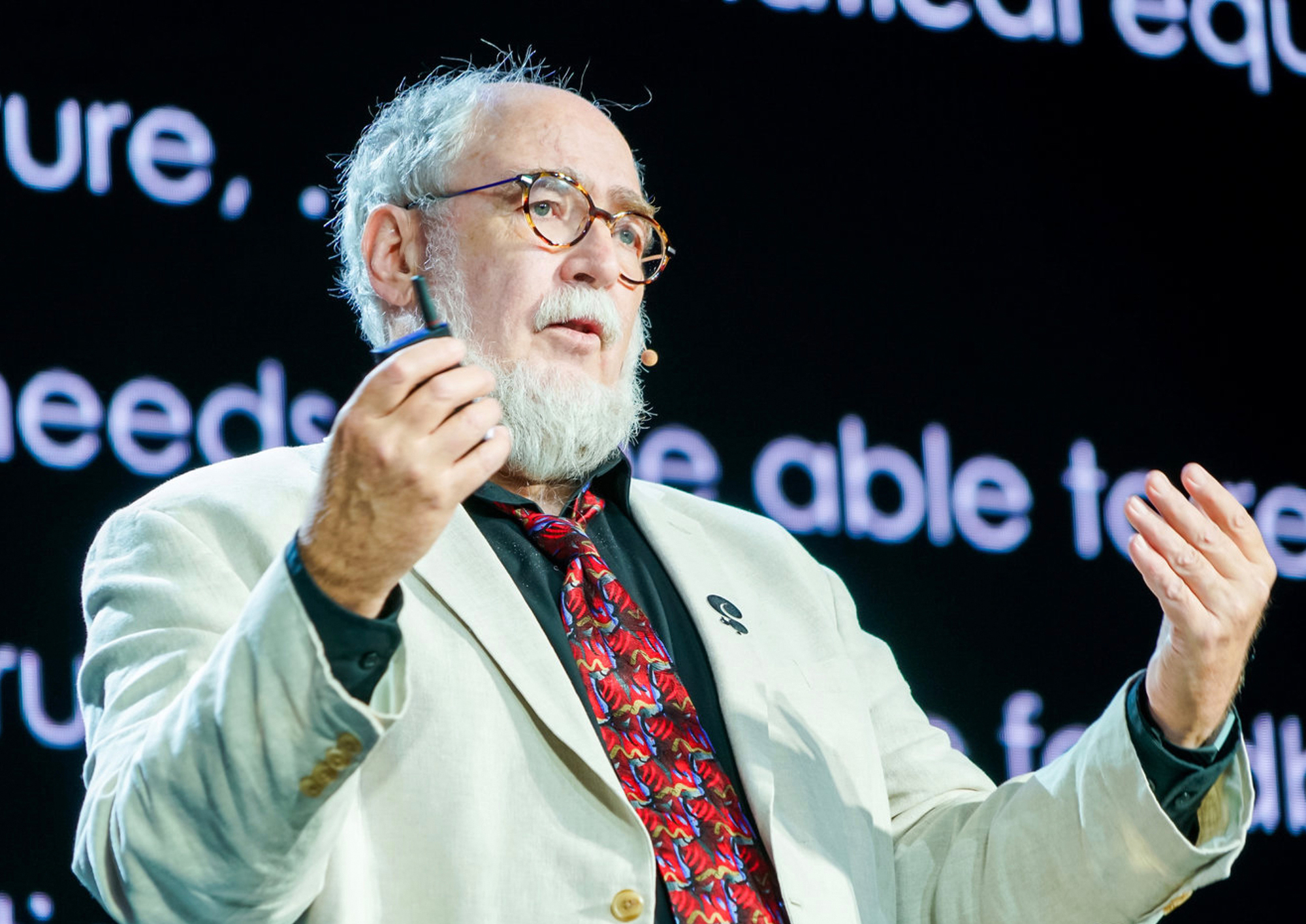

Artificial intelligence (AI) should work in partnership with human beings rather than merely serve as a tool: this vision is at the center of Professor Dr. Kenneth D. Forbus’ research. The American computer scientist from Northwestern University in Illinois, USA, hopes that such an approach will ‘revolutionise the way AI systems are built and used.’ Today (19 January) at 4pm, he will give a talk on his research. The talk is part of the new lecture series ‘Co-Constructing Intelligence,’ a joint event organised by Bielefeld University, Paderborn University, and the University of Bremen. The talk will be held online in English and is free of charge.

When humans and machines interact, their different ways of interpreting the world can lead to misunderstandings. Humans are used to interpreting what they perceive, even with little prior knowledge. AI-based technical systems, by contrast, generally struggle with individual cases, because they need large numbers of examples and large amounts of data to interpret phenomena in their environment meaningfully. ‘While there has been substantial progress in artificial intelligence, we are still far away from systems that can learn incrementally from small amounts of data while producing results that are understandable by human partners,’ explains Kenneth D. Forbus.


More human-like AI systems are the goal

Kenneth D. Forbus, a computer scientist and cognitive scientist, works with qualitative representations: ways of representing knowledge that rely not on numbers but on more abstract, symbolic descriptions. Another key concept in his research is analogical learning, in which humans draw comparisons by applying the system of relationships they observe in one phenomenon to another. Forbus argues that qualitative representations and analogical learning are central to human understanding, and he believes that these ideas should ‘provide the basis for new technologies that will help us create more human-like AI systems.’

Understanding the collective understanding of the world

In his talk, Forbus will illustrate the principles of human thought using examples from vision, language, and reasoning. He will also discuss how these principles can be used to create ‘social software organisms’, that is, AI systems that operate at a human level.

His talk, which kicks off the new lecture series ‘Co-Constructing Intelligence,’ is entitled ‘Qualitative Representations and Analogical Learning for Human-like AI Systems.’ The term ‘co-construction’ refers to the concept that interpreting the environment and performing actions take place collaboratively. This happens organically, for instance, when children do household chores with guidance from their parents. Such natural types of interaction, however, have not yet been possible with service robots and other technical systems. ‘Kenneth D. Forbus is one of the pioneers in the field of AI research,’ says Professor Dr. Philipp Cimiano, who heads the Semantic Computing Group at Bielefeld University. ‘His approach has generated significant momentum for self-learning AI systems that provide meaningful information and explain their interpretations in ways that humans can understand – despite having less data.’

Lecture series born of research initiative

Bielefeld University, the University of Bremen, and Paderborn University are working together on the new lecture series, which was organised by Cimiano together with Dr.-Ing. Britta Wrede, a computer scientist at Bielefeld University, Professor Dr. Michael Beetz, a computer scientist at the University of Bremen, and Professor Dr. Katharina Rohlfing, a linguist at Paderborn University. The lecture series emerged from a joint research initiative among these three universities. Their collaboration is guided by the principle of co-construction, with the aim of aligning the understanding and capabilities of robots with those of humans. In doing so, the researchers are pursuing the vision of flexible and meaningful interaction between robots and humans in daily life. Such interaction should enable robots to carry out tasks set by humans even when those tasks are only vaguely formulated or the robot still has to learn the necessary steps. It should also help in situations where certain actions are only possible in cooperation with humans, or where the environment in which the task is performed changes.