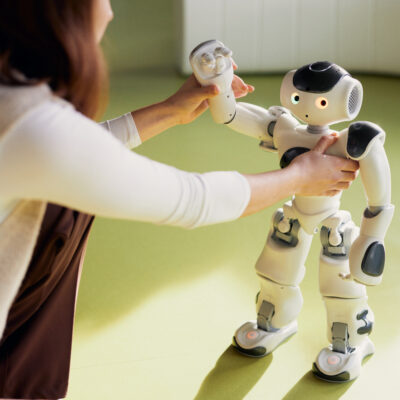

Is this the future of dialogue? The use and impact of ChatGPT technologies in society are the subject of controversial debate. While some praise this development as groundbreaking, others see potential risks. In a new series of public talks organised by the Transregio ‘Constructing Explainability’ (TRR 318), two experts will present their research on this much-discussed topic. In the Collaborative Research Centre at Paderborn University and Bielefeld University, an interdisciplinary team is working on the principles, mechanisms and social practices of explanation. The findings are to be taken into account in the design of AI systems and contribute to making AI understandable.

Anyone interested can attend the two lectures online: Prof. Dr Isabel Steinhardt will present her research on Wednesday, 29 May on the topic of ‘Digital divide in higher education also through AI?’. Prof. Dr Hendrik Buschmeier will focus on trust and mistrust in dealing with AI chatbots in his lecture on Wednesday, 26 June. Both one-hour events take place via Zoom at 5 pm. Registration is not required.

‘My colleagues’ research responds to rapid technological developments and deals with skills that are relevant for dealing with AI,’ says Prof. Dr Katharina Rohlfing, Professor of Psycholinguistics at Paderborn University and spokesperson for TRR 318. ‘Since an explanation is always also an exchange of knowledge and relies on a wide range of skills, we are pleased to get to know these expert perspectives,’ Rohlfing continues.

What influences the use of ChatGPT technologies?

The arrival of algorithmic applications and AI tools at universities, particularly through ChatGPT, has opened up a range of uses for students, from brainstorming to writing and research. However, Steinhardt emphasises: ‘The use of these tools is strongly influenced by social factors, which leads to differences in use, acceptance and competence.’

This phenomenon is analysed using the concept of the digital divide, which is based on three assumptions: unequal access to the internet and other information and communication technologies (ICT); unequal use, together with the associated unequal knowledge and skills in using the technology; and, finally, the social differences that result. In her analysis, Steinhardt focusses on two levels: access to AI tools and their use by students. The data come from a questionnaire developed for this purpose and are supplemented by a comprehensive literature study on the topic.

Dynamics of trust and mistrust in the interaction with AI chatbots

How do we deal with the trust and mistrust we feel towards AI chatbots? This topic is at the centre of Buschmeier’s expert lecture, which takes an in-depth look at the facets of the human-technology relationship. The lecture addresses four questions on trust and mistrust in the use of AI chatbots such as ChatGPT and other generative AI applications. First, it looks at why users should be suspicious of AI chatbots. Second, Buschmeier considers the role that trust plays when interacting with this technology. Third, he asks how it can be explained that users (nevertheless) trust AI chatbots. Finally, these considerations lead to the question of how awareness of healthy mistrust can be raised when dealing with AI chatbots.

The Transregio 318

The interdisciplinary research programme ‘Constructing Explainability’ goes beyond the question of the explainability of AI as a basis for algorithmic decision-making. The approach promotes the active participation of people in socio-technical systems. The aim is to improve human-machine interaction, to focus on the understanding of algorithms and to investigate this understanding as the product of a multimodal explanation process. The German Research Foundation (DFG) is providing around 14 million euros in funding until July 2025.

Further information is available at www.trr318.de.

Zoom link to participate in the lectures:

https://uni-paderborn-de.zoom.us/j/92376521281?pwd=ZWkvckQ3THhLejJ0K1hzSGlZZUQvdz09

This text has been translated automatically.