After breakfast, the dishes are cleared away: the empty milk carton is thrown out and the dirty plates are put in the dishwasher. This routine is second nature to humans. What if we could also teach robots these manual skills, just as parents teach their children? A group of researchers from the universities of Bielefeld, Paderborn, and Bremen wants to radically transform the interaction between humans and machines and teach robots knowledge and manual skills in a natural way.

Until now, machines have been programmed mainly to perform a single task in factories and production facilities. Admittedly, there are also robots that perform more complex tasks. They are fed enormous amounts of data and are completely pre-programmed in laboratories; but here, too, the scope of their tasks is clearly defined in advance. The researchers’ goal goes beyond this. They want to break new ground in the interaction between humans and robots, enabling robots to accomplish completely new tasks together with human users.

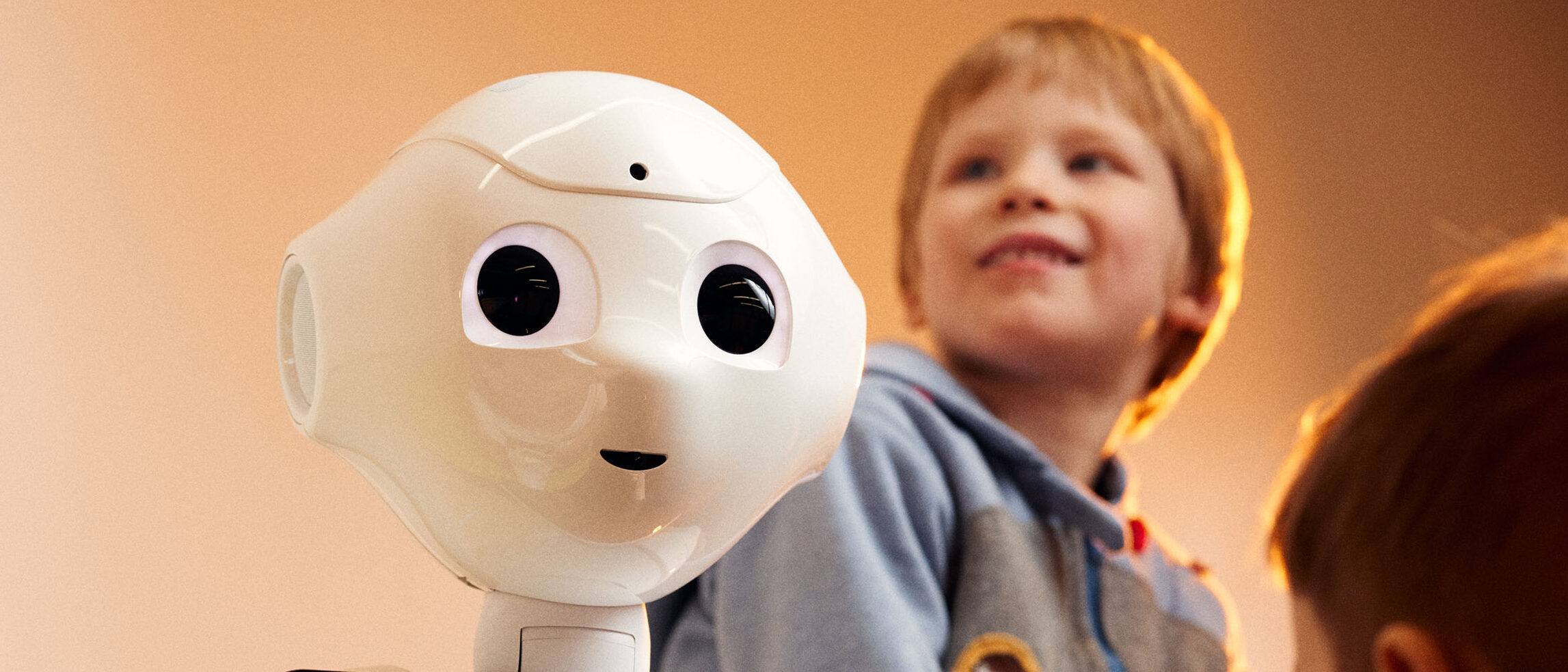

© Patrick Pollmeier

Artificial neural networks: sometimes more powerful than the human brain

This flexibility is a typical feature of computer programmes based on artificial intelligence (AI). Today, such programmes already perform complex tasks that sometimes exceed the capacities of the human brain. One example is deep learning, which uses artificial neural networks to make data-based predictions. It allows AI to find solutions to problems that would not have occurred to human experts.

One drawback of this technology, however, is that an AI does not give humans an understandable rationale for why it made a particular prediction. This makes dialogue difficult, because the AI is often unable to give meaningful answers to queries. It also poses a significant problem when it comes to transferring AI technology to robotics. So how can an AI-assisted robot learn to adapt to the very personal requirements of its users? The researchers’ answer is: like a human being, by means of co-construction, that is, learning by working together.

Collaborations provide excellent setups

© Bielefeld University/Michael Adamski

‘Human–robot research is pioneering, but in academia, co-construction is not given enough consideration as a guiding principle in robotics,’ says Professor Dr Philipp Cimiano, spokesperson for Bielefeld University’s Centre for Cognitive Interaction Technology (CITEC). He is developing the new research approach together with computer scientist Professor Dr.-Ing. Britta Wrede from Bielefeld University; psycholinguist Professor Dr Katharina Rohlfing and computer scientist Professor Dr Axel-Cyrille Ngonga Ngomo from Paderborn University; and computer scientists Professor Dr Michael Beetz and Professor Dr Tanja Schultz from the University of Bremen. The initiative expands the existing interdisciplinary collaborations between the three universities and consolidates them in the new CoAI Centre. CoAI stands for Cooperative and Cognition-enabled Artificial Intelligence.

In the Collaborative Research Centre/Transregio ‘Constructing Explainability’ (SFB/TRR 318), researchers from Bielefeld and Paderborn are already investigating the cooperative practices involved in explaining and how these can be taken into account when designing AI systems. At the same time, researchers at the University of Bremen are studying, in the Collaborative Research Centre ‘Everyday Activity Science and Engineering’ (EASE, SFB 1320), the skills robots need to understand their environment and their own actions, and to make appropriate decisions based on this understanding. Researchers from Bielefeld University are involved in a subproject of EASE.

When breakfast becomes science

© Bielefeld University/Michael Adamski

Interdisciplinarity is key, says Britta Wrede, co-leader of project Ö (public relations) in SFB/TRR 318. She explains how the research focuses complement one another: ‘The team in Bremen has robots with highly complex architectures. They interact with the researchers on site, but not yet with ordinary users. Bielefeld robots such as Pepper interact with humans, but so far they cannot perform any sophisticated operations. Paderborn, on the other hand, is home to experts on the principle of co-construction, who will now apply their knowledge of human interaction to robots as well.’

Researchers from computer science, robotics, linguistics, psycholinguistics, psychology, philosophy, and cognitive science collaborate in the three-university team. In the lab, a morning kitchen scenario gives the team an especially interesting field of research: ‘There are variations for each everyday action because we all set the table differently or have our own personal preferences,’ says Philipp Cimiano. ‘We are interested in precisely these actions that involve flexibility.’ This kind of interaction between human and machine is an example of where AI can be deployed usefully, especially if it also masters the method of co-construction. The aim of the CoAI Centre is now to develop a common, convergent research approach that makes it possible to use co-construction across disciplinary boundaries.

Co-construction: creating something together

© Bielefeld University/Susanne Freitag

So that a robot will one day be able to prepare a breakfast egg just the way its user wants it, the researchers are analysing exactly how humans learn skills. As an educational approach, co-construction means learning through collaboration. For psycholinguist Rohlfing, this interaction is not a single event but a process: ‘Signals are constantly sent back and forth and involve continuous adaptation to the other person. This adaptation creates something new between two people that was not there before.’ This is how people understand and learn.

We are familiar with co-construction from human development. When adults teach children something, they use the method of scaffolding: ‘Adults take over the role of the child in some places so that the child can complete their part in other places and learn by doing so. Gradually, this framework of assistance is phased out,’ explains Rohlfing. The researchers are specifically looking at how people teach other people in a kitchen scenario: how does this work with pouring, stirring, and cutting? ‘It is done by showing, demonstrating, and presenting. For AI-assisted robots to use this principle and learn manual skills, they need to be made sensitive to such assistance strategies.’

New representations in robotic architecture

© University of Bremen

Understanding human cognitive abilities is also fundamental for computer scientists. ‘As soon as the robot can grasp what humans want and what they are capable of doing themselves, it can help them in direct interaction,’ says Michael Beetz, spokesperson for the Collaborative Research Centre EASE. ‘After all, if we have no idea what is involved in such interactions, we cannot develop systems that act with and for people.’

The goal is to create new technological foundations for robotics and AI systems. ‘This requires new architectures that combine all these things: dialogue, action, perception, planning, reasoning, general knowledge, and partner modelling. All these aspects are necessary for a new quality of human–machine interaction,’ says Beetz. With the help of this new representation in robots, which covers an understanding of people and, above all, of what they are doing, application scenarios emerge that, according to the researchers, can form a basis for flexible and meaningful interaction between robots and people in everyday life.