How much easier do people find following a robot’s statements when it makes pauses in speech or produces filler sounds? This is the focus of a new study by Bielefeld and Bremen universities.

In the study, researchers recorded participants’ brain waves using electroencephalography (EEG) while they interacted with a robot via a screen. To link the laboratories in Bielefeld and Bremen, the team used LabLinking, a method developed in Bremen.

During conversations, it is normal for a person to falter because they are either distracted or thinking. What impact does it have when robots imitate this behaviour in conversations with humans? And what influence does this have on human memory? A research team from Bielefeld and Bremen universities has investigated this. The research groups of Professor Dr Britta Wrede from the Medical School OWL at Bielefeld University and Professor Dr Tanja Schultz from the Department of Computer Science and Mathematics at the University of Bremen worked together on the study. Wrede’s research group specializes in medical assistance systems; Schultz’s group focuses on cognitive systems.


Since February 2022, the Bielefeld and Bremen scientists have been jointly investigating whether EEG signals from the human brain change significantly when distractions and hesitations occur during human–robot interaction. The researchers hope to use their findings to draw conclusions about memory and learning effects in humans.

Study mimics real-life conversations

‘As a rule, the conditions in laboratory situations are ideal. So, in such cases, there are no distractions in human–robot interactions,’ says Britta Wrede. ‘We aim to develop a system with a virtual robot that is close to reality. Conversation partners are not always focused on the matter at hand. Our robot needs to be able to react to such inattentiveness,’ says Wrede.

‘For the study, we developed a multi-location setup for human–robot interaction allowing us to measure brain signals from test participants while they interact with a robot,’ says Tanja Schultz. ‘This helps us to better understand the underlying cognitive processes.’

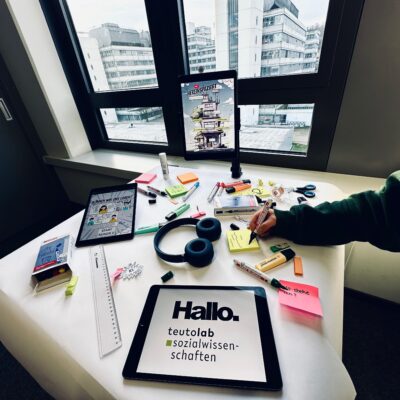

© Lehmkühler

Brain activities change when a person is distracted

In the study, test subjects held a video meeting with the robot and were exposed to distracting noises. ‘To regain the attention of a distracted person, the robot stopped talking, hesitated in its speaking, or interrupted with, for example, “Uh” or other filler sounds.’

A live video of the robot, called Pepper, was streamed from Britta Wrede’s lab at Bielefeld University to Tanja Schultz’s lab at the University of Bremen. There, a test subject watched the robot on a screen and followed its instructions: Pepper explained how to arrange certain objects on a table. While the test subject in Bremen was distracted, for example by noises in the laboratory, the robot in Bielefeld hesitated or interrupted its instructions. The test subject wore an EEG cap that recorded their brain waves for later evaluation.

‘Our assumption was that differences would be visible in the EEG when the robot hesitates (that is, interrupts its directions) compared to when it does not make pauses in speaking,’ says Britta Wrede. This assumption was confirmed. Tanja Schultz says: ‘The results of our study involving twelve people show that the EEG correlates are different in the distracted state than in the baseline state without any distractions.’


What role did the LabLinking method play?

To conduct the experiment, the researchers used LabLinking, a method developed by Tanja Schultz. ‘This method enables interdisciplinary joint research between experimental laboratories that are located far apart from each other,’ says Schultz. ‘This study could not have been carried out without LabLinking, because we had to connect the individual expertise and equipment of the two research teams and labs involved in real time to collectively achieve the goal.’

According to Schultz, the study shows that LabLinking enables human–robot interaction studies to be conducted in laboratories that are some distance from each other. ‘This creates an initial basis for more in-depth research on robotic scaffolding—the new approach to introducing robots to tasks with which they are unfamiliar,’ says Schultz. ‘Humans provide the robots with an initial orientation for the new learning situation, and this allows them to master the task in question independently.’

As with all LabLinking studies, the results are made available to researchers worldwide on the open platform openEASE. Schultz points to one advantage of the platform: ‘We can extract information about human activities from this database so that robots can better perform human activities in the future and learn to understand.’

Further research is needed to determine to what extent the robot’s hesitations or interruptions in the presence of distractions, such as noise, also help humans understand the task better, and to what degree this is reflected in the EEG. ‘We are aiming to teach the robot to notice itself whether or not its hesitation is helpful for a human’s learning success, and to adapt its behaviour accordingly,’ says Britta Wrede. This knowledge could be used in care situations, for example. ‘When interacting with a person with dementia, the robot would notice when that person needs assistance or, for example, simply more time to process what is being said.’ An individual’s independence should be preserved and encouraged. ‘The robot would therefore give only as much help as is necessary,’ says Wrede.