For people, giving an explanation comes naturally, but for artificial intelligence it is no ordinary task. The back-and-forth of questions, answers, and follow-up questions taxes even the most sophisticated algorithms. At Bielefeld University, researchers are now equipping artificial intelligence to handle such dialogues. The work brings together computer science, robotics, medicine, neurobiology, linguistics, psychology, sports science, and sociology. Although this research is still in its early days, the potential applications are manifold, including medical diagnostics. Importantly, such systems could also help make the decisions of artificial intelligence more transparent.

The complexity of human abilities often becomes apparent only when they are examined closely. The intricacy of a simple explanation, for example, usually goes unnoticed. Just how complex such a process is, however, becomes evident when one tries to transfer it to artificial intelligence. These days, anyone who asks a computer for an explanation, for instance an AI-supported voice assistant of the kind now found in many households, usually gets a prewritten text read aloud to them. And follow-up questions? Error message.

Professor Dr-Ing. Britta Wrede, who works on medical assistance systems at Bielefeld University’s Medical School OWL, and Professor Dr Philipp Cimiano, who studies semantic technologies in Bielefeld University’s Faculty of Technology, aim to replace this error message with AI capable of such complex interactions. This type of interaction is known as “co-construction”, and it facilitates deep comprehension at the linguistic level.

‘From the simple meaning of the word “co-construction”, we know that something is created together, and what is being co-constructed here are explanations,’ says Cimiano. ‘An explanation doesn’t just exist somewhere. It’s not something lying around that you can simply record and play back. It’s something that is co-created.’ It is not enough simply to give an explanation to the person asking and assume that they will understand it, adds Wrede. ‘When something is explained in a conversation, the person asking the question, the “explainee”, asks about something that is unclear to them.’ This results in a highly individualised interaction between the explainer and the explainee. ‘Accordingly, the questions asked become particularly relevant for the explainer, who can use them to get an idea of what misunderstandings the explainee might have,’ Wrede continues.

The explainer and explainee can reach their goal only together, and the process can also fail. ‘Co-construction can’t take place if the explanation makes so little sense that the explainee isn’t even able to ask a follow-up question.’ If, however, a robust interaction of questions and answers is taking place, both partners co-construct the explanation together. ‘Everyone who has tried to explain something to someone knows that the explanation is created in the moment, and this is what we mean when we talk about co-construction,’ says Cimiano.

Co-construction: understanding and active questioning

What makes this kind of interactive explanation so important? After all, knowledge can also be acquired from books, for instance. For Wrede, co-construction offers clear advantages, not least that the explanation is tailored to the individual explainee. ‘The process also allows explainees to take an active role. Studies have shown that taking an active role is an important component of achieving understanding. This is because fewer cognitive resources are engaged when a person just listens passively to an explanation. In contrast, more cognitive processes are activated when people actively engage in questioning, for example by formulating alternative explanations. In this way, they can try to make connections, and in doing so may encounter things they don’t understand, about which they can then ask concrete follow-up questions.’

This active role also puts the explainee in a better position to apply what has been explained to their own problem and to act on it. ‘If, for instance, a patient receives information on a medical finding through co-construction, the patient has already asked many questions during the explanation and thought through many possible connections. This makes it easier for the patient to decide later about an operation or a medical treatment,’ says Wrede.

Help with medical diagnoses

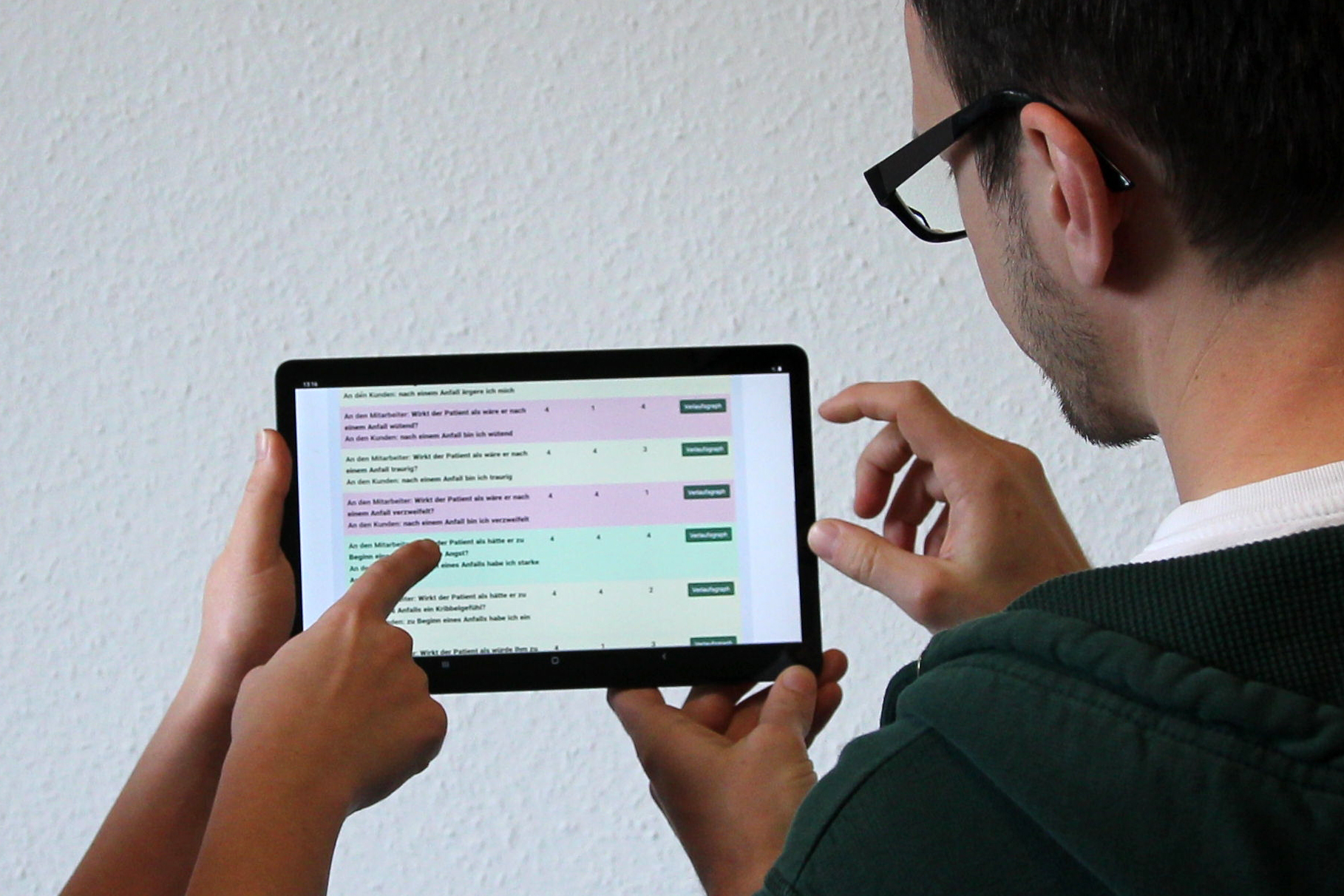

Cimiano and his team have given a great deal of thought to how such a system could work in practice: ‘One of our use cases is the medical diagnosis of epilepsy, a serious illness that is quite difficult to diagnose. The diagnosis depends on many different factors, such as how many seizures the patient has, how intense they are, in which phases they occur, or what exactly happens during the seizures.’

The researchers are now developing an assistance system for physicians. ‘We are working towards interaction on an equal footing,’ explains Professor Cimiano. The AI system needs a concrete understanding of the medical field and must be able to pose questions based on this background knowledge. A dialogue might unfold as follows: the system asks whether certain causes of a disease have already been ruled out. ‘The doctor could then say, yes, we have excluded those causes,’ explains Cimiano. ‘The machine can then respond, OK, then we have a case of epilepsy here, with 80 percent probability. The doctor can then ask why this is the case, and so on.’ Throughout this process, the system should not dictate to the human user: decisions are always made by the doctor, who ultimately bears responsibility. ‘The system should, however, give the doctor enough information to make a decision in good conscience.’

Professor Wrede and her research colleagues want to use artificial intelligence to analyse ECG data in order to detect possible atrial fibrillation. They are collaborating on this with Find-AF 2, a research study in which neurologist Professor Dr. med. Wolf-Rüdiger Schäbitz of the Protestant Bethel Hospital and the Medical School OWL is taking part. ‘In our study, we are looking at whether this AI approach to a co-constructive explanatory process can even generate new insights for medical professionals.’ Current explainable AI algorithms can already indicate which criteria led them to a decision. ‘Theoretically, if medical experts were able to interrogate the system more interactively, it is quite possible that they would discover information and relationships in the data that they had not noticed before.’ Whether this is actually possible, however, remains to be seen.

Examples of where co-construction is being studied

Kinbiotics collaborative research project

The overuse of broad-spectrum antibiotics is causing many problems, including growing antibiotic resistance among pathogens. Led by Bielefeld University, researchers are developing an AI-powered assistance system to support healthcare professionals in decisions about antibiotic therapy. It is intended to provide recommendations for individual patients, with the goal of maximising efficacy and minimising side effects. The Federal Ministry of Health has been funding the project since 2020. The participating project partners include the three member hospitals of the OWL University Hospital.

‘Bots Building Bridges’ collaborative research project

In this project coordinated by Bielefeld University, computer scientists and sociologists are investigating how AI can be used to counter malicious bots that spread fake news and hate speech. If bots are part of the problem, could they also be part of the solution and help combat fake news and hate speech on the internet? The project will develop and provide an online application that works by way of co-construction to help improve the culture of debate online. It has been funded by the Volkswagen Foundation since 2020.

NRW Research Centre ‘Designing Flexible Work Environments’

What needs to be considered when designing intelligent systems so that they have a positive impact on people in the workplace? Bielefeld University and Paderborn University are pursuing this question as part of the North Rhine-Westphalian Research Centre ‘Designing Flexible Work Environments’. In unfavourable scenarios, humans become the tools of AI and must adapt to its dictates. Alternatively, AI can be used to enhance human abilities, enabling people to complete not only more tasks but also qualitatively more demanding work than would otherwise be possible. The research centre has been funded by the Ministry of Culture and Science of the State of North Rhine-Westphalia since 2014.

‘Robust Argumentation Systems’ Priority Programme

How can computers understand and produce arguments? Countless discussions take place on the internet, whether in media outlets and portals or on social media sites. In this Priority Programme, researchers are developing artificial intelligence systems that understand debates and can summarise them for humans. Co-construction comes into play when users ask the systems to break down the arguments in a debate and assign them to the respective camps. The systems are also intended to produce arguments in response to a question, indicating what speaks for and against a position, as in the debate about a vaccination mandate. The Priority Programme is coordinated by Bielefeld University and has been funded by the German Research Foundation since 2017.

JAII – Joint Artificial Intelligence Institute

Bielefeld University and Paderborn University founded this institute together in 2020. JAII researchers develop AI systems that augment human expertise by interactively assisting in decision-making. JAII promotes cooperation between the two universities, pools resources, and acquires joint collaborative projects, following its own AI research strategy with a particular focus on co-construction between humans and machines.

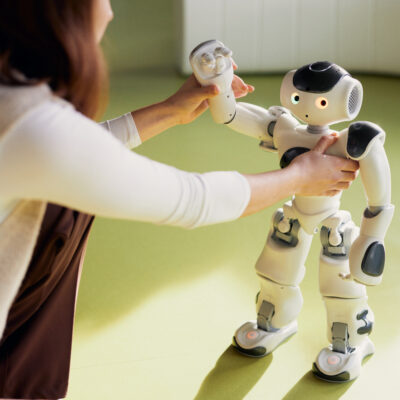

CITEC – Center for Cognitive Interaction Technology

At CITEC, one of the central academic institutes of Bielefeld University, researchers from computer science, biology, linguistics and literary studies, mathematics, psychology, and sports science study interaction and communication between humans and machines. Strategic partners include the household appliance manufacturer Miele, the Bertelsmann corporation, the Honda Research Institute, and the v. Bodelschwingh Bethel Foundation. From 2007 to 2019, CITEC was part of the Excellence Initiative of the German federal and state governments. Since its founding, CITEC has been dedicated to basic research on intelligent technical systems capable of social interaction, artificial intelligence, and flexible, situation-dependent action. Current projects on co-construction link theoretical concepts with technological innovations emerging from this research centre.